Lessons Learned in the CIPS Curriculum Project

Pat Heller

This working paper outlines some lessons learned in the first 2.5 years of the CIPS Project (Constructing Ideas in Physical Science: A Middle School Physical Science Curriculum), funded by the National Science Foundation. The purpose of the project is to develop and field test an eighth-grade physical science curriculum based on the Project 2061 Benchmarks for Science Literacy and Instructional Criteria. The project is a collaboration of three universities, with four co-project directors: two from San Diego State University (Sharon Bendall and Fred Goldberg), one from the University of Minnesota (Pat Heller), and one from Western Michigan University (Robert Poel). In addition, each site started with at least two experienced, outstanding middle-school teacher collaborators.

The initial proposal included the development of six units:

Unit 1: Introduction

Unit 2: Force and Motion

Unit 3: Light and Color

Unit 4: Current Electricity and Magnetism

Unit 5: The Small Particle Model of Matter

Unit 6: Chemistry

Units 1 and 5 are being developed at the University of Minnesota, Units 2 through 4 at San Diego State University, and Unit 6 at Western Michigan University.

We assumed that research-based curriculum development consists of answering the six questions below, using the best research and materials available. We intended to use the Project 2061 Benchmarks to answer the first question, and the Instructional Criteria to help us develop and implement the answers to the second, fourth, and fifth questions.

- Content and Skills. What should students know and be able to do?

- Prior Knowledge. What content/skills do students start with (prerequisite knowledge that can be built on, missing knowledge and common misconceptions)?

- Content Organization (Scope and Sequence). How should the content/skills be organized throughout a year-long curriculum?


  - What content/skills should be the organizing principles?

  - What story line can be used to motivate students?

  - Which content and skills will be taught within a unit?

  - Which content and skills will be taught over many units?

  - How will the content be sequenced?

- Pedagogy. How should the content and skills be taught?

- Assessment. How will we know that content and skills have been learned?

- Constraints. What are the real-world constraints?

  - Teachers

  - Students

  - Administrators

  - Parents

In the past 2.5 years we have learned many things about developing a curriculum based on the Project 2061 Benchmarks and Instructional Criteria. For the purposes of this conference, the lessons learned are grouped into two broad categories. The first category is what we learned about the long time necessary to develop such a curriculum. Based on our experiences, we recommend that curriculum projects based on the Project 2061 Benchmarks and Instructional Criteria allow at least three years for the development phase, at least until there are more curricula to use as models and more experienced authors. The second category of lessons learned is the importance of ongoing formative evaluation during the development phase. We recommend allowing at least two years for testing and revising draft versions of the curriculum. The experiences that led to these recommendations are summarized below.

What We Learned About Development Time

In our original proposal timeline, the first year included the analysis of the AAAS Project 2061 Benchmarks and Instructional Criteria as well as the development of six draft units. One year was allotted for testing and revision of these units by our developer teachers, one year for piloting the curriculum with a small set of new teachers, and two years for field testing. After the first year, however, we knew we would not be able to follow this timeline. The sections below describe what we have learned about understanding the Project 2061 Benchmarks, creating benchmark ideas, and incorporating the Instructional Criteria into an instructional design.

Creating Physical Science Benchmark Ideas

Only one team member had any prior experience with the AAAS Benchmarks and Instructional Criteria. So, after a brief training session with one of the Project 2061 staff members, we started our development process with an analysis of the 63 possible Benchmarks we identified in our proposal. First we looked at the grades 6-8 Benchmarks for physical science content (Chapter 4), following the suggested procedure outlined below.

- Identify possible benchmarks (content and skills).

  - Determine the meaning (level) of each benchmark.

  - Identify knowledge that goes beyond the benchmark.

  - Break benchmarks into meaningful "ideas."

- Identify prior knowledge.

  - Identify prerequisite knowledge (grades 3-5 benchmarks).

  - Identify known student difficulties with ideas (from Chapter 13).

- Select Benchmarks for CIPS.

  - Determine which content/skills should be organizing principles.

We found the draft Project 2061 strand maps very helpful in this procedure.

To our surprise and consternation, however, the analysis of the content benchmarks took over two months! There were three reasons for this process taking so long. First, some benchmarks contain only one or two ideas that are easy to identify, like Benchmark #2 in Table 1 below. Other benchmarks, like Benchmark #4 in Table 1, are very abstract and complex, and contain many ideas that are necessary to make sense of the benchmark.

Second, some benchmarks were difficult to interpret, even with the grades 3-5 and 9-12 benchmarks and strand maps. The four benchmarks in Table 1 come from two different sections of Chapter 4. The two benchmarks from Section E (Energy Transformations), for example, seem to contain contradictory statements about the concept of heat: one is that "heat can be transferred through materials by the collisions of atoms, or across space by radiation. . ." and the other that "heat energy is in the disorderly motion of molecules." The first statement implies that "heat" refers to different processes for increasing the temperature of objects (all involving a transfer of energy into an object). The second statement implies that heat is a form of energy that is a characteristic of materials (i.e., the energy of the disorderly motion of their molecules). Moreover, it is not clear how the concept of "radiation" or "electromagnetic radiation" should be treated. In Section E, radiation is not mentioned in Benchmark #4, which lists different forms of energy, yet it is included in Benchmark #3 about heat transfer. Radiation also appears in Benchmark #5 in Section F (Motion). We spent many hours discussing energy transfers and transformations and the conservation of energy in both dynamics and thermodynamics. We discovered that, as discipline experts, we have a great deal of tacit knowledge of what words such as "heat," "energy transfer," and "energy transformation" mean in different contexts. It is very difficult to "unpack" this expert knowledge and develop a sequence of explicit ideas for the energy benchmarks that is understandable and meaningful for middle-school students.

Table 1. Some 6-8 Benchmarks from Chapter 4

| Section | Benchmark |
|---|---|
| 4E. Energy Transformations | #3: Heat (energy?) can be transferred through materials by the collisions of atoms, or across space by radiation (is this a form of energy?). If the material is a fluid, currents will be set up in it that aid in the transfer of heat. |
| 4E. Energy Transformations | #4: Energy appears in different forms. Heat energy is in the disorderly motion of molecules; chemical energy is in the arrangement of atoms; mechanical energy is in moving bodies or in elastically distorted shapes; gravitational energy is in the separation of mutually attracting masses. |
| 4F. Motion | #2: Something can be "seen" when light waves emitted or reflected by it enter the eye, just as something can be "heard" when sound waves from it enter the ear. |
| 4F. Motion | #5: Human eyes respond to only a narrow range of wavelengths of electromagnetic radiation: visible light. Differences of wavelength within that range are perceived as differences in color. |

The third reason the analysis of the content benchmarks took so long is related to the question of how we should organize and sequence the teaching of the content benchmarks to achieve a coherent curriculum. One of the criticisms of the available science curricula (both textbook and inquiry-based) is that science is taught as a sequence of disconnected topics (e.g., forces and motion, heat and temperature, magnetism, electric circuits). Major organizing principles of physics and chemistry are not clearly distinguished from specific concepts applicable to particular phenomena. These major organizing principles are also buried within the Benchmarks, along with the specific ideas related to different phenomena. After much discussion, we decided that there were four major organizing principles of physics and chemistry to be taught at the middle-school level:

The Conservation of Energy (4E6-8#1). Energy cannot be created or destroyed, but only changed from one form into another.

Newton's Second Law of Motion (4F6-8#3). An unbalanced force acting on an object changes its speed or direction of motion, or both. If the force acts toward a single center, the object's path may curve into an orbit around the center.

The Conservation of Mass (4D6-8#7). No matter how substances within a closed system interact with one another, or how they combine or break apart, the total weight of the system remains the same. The idea of atoms explains the conservation of matter: If the number of atoms stays the same no matter how they are rearranged, then their total mass stays the same.

The Kinetic-molecular Model of Matter (4D6-8#1). All matter is made up of atoms, which are far too small to see directly through a microscope. The atoms of any element are alike but different from atoms of other elements. Atoms may stick together in well-defined molecules or may be packed together in large arrays. Different arrangements of atoms into groups compose all substances.

4D6-8#3. Atoms and molecules are perpetually in motion. Increased temperature means greater average energy of motion, so most substances expand when heated. In solids, the atoms are closely locked in position and can only vibrate. In liquids, the atoms or molecules have higher energy, are more loosely connected, and can slide past one another; some molecules may get enough energy to escape into a gas. In gases, the atoms or molecules have still more energy and are free of one another except during occasional collisions.

We decided to use the concept of interaction as the central theme of CIPS. Scientists take the point of view that events are caused by the interaction of objects. That is, when two objects interact, they act on or influence each other to jointly cause an effect. The usual topics found in physics and chemistry texts at all levels (e.g., force and motion, heat, light and color, current electricity, acids and bases) typically present the energy and/or force relationships that determine the strength or magnitude of each type of interaction. This expert approach is not, however, made explicit to either teachers or students. We decided that these topics might be more coherent and meaningful for students if each topic were approached explicitly in the same way. That is, each CIPS unit is framed as the investigation of a different type of interaction (e.g., light interactions, mechanical interactions, thermal interactions, small particle interactions). Moreover, whenever appropriate, each interaction is described in terms of both energy and forces.

Table 2. Number of Benchmarks Identified in Each Chapter

| Chapter in Benchmarks | In Proposal | After Clarifying Benchmarks & Criteria | After 2.5 Years |
|---|---|---|---|
| Nature of Science | 14 | 2 | 3 |
| Nature of Mathematics | | | |
| Nature of Technology | 3 | | |
| Physical Setting | 19 | 20 | 20 |
| Living Environment | | | |
| Human Organism | | | |
| Human Society | | | |
| Designed World | | | |
| Mathematical World | 6 | 2 | |
| Historical Perspectives | 2 | 2 | |
| Common Themes | 11 | 3 | 2 |
| Habits of Mind | 16 | 4 | 4 |
| Total | 63 | 37 | 33 |

Creating Related Content and Skill Benchmark Ideas

We spent an additional 2.5 months analyzing the Benchmarks in six related chapters: Chapter 1 — The Nature of Science; Chapter 3 — The Nature of Technology; Chapter 9 — The Mathematical World; Chapter 10 — Historical Perspectives; Chapter 11 — Common Themes; and Chapter 12 — Habits of Mind. We thought naively that, while the focus of the CIPS curriculum would be on the physical science content Benchmarks, we would also be able to meet many related benchmarks at the same time, as shown in the second column of Table 2 above.

We spent long hours discussing these related benchmark ideas. The middle-school teachers were especially enthusiastic about the related mathematics and habits-of-mind ideas. We had to decide which related benchmark ideas we could realistically meet, so we first estimated the number of instructional hours available to teach science in a middle school. Of the 36 weeks in the school year, the middle-school teachers estimated that 3-4 weeks are used for testing students, teaching special topics required by districts, and interruptions like assemblies and field trips. This leaves about 32 weeks of 45- to 50-minute periods to teach science, or about 120 to 130 instructional hours.

Table 3. Example from Decision Matrix for Related Benchmarks

| Benchmark Ideas | Comments | Units/Criteria |
|---|---|---|
| 9B6-8#3: Graphs can show a variety of possible relationships between two variables. As one variable increases uniformly, the other may: | Criterion III possibilities: position vs. time for constant speed [Unit 2]; number of batteries vs. current [Unit 4]; mass vs. volume [Unit 5]; mass dissolved vs. volume [Unit 5 or 6]; force of air vs. area [Unit 5]; pressure vs. temperature [Unit 5]. Most phenomena are in Unit 5, and there is not enough instructional time to meet the content benchmarks and Criteria IV and V in this unit. | III; III; III IV V |
| As one variable increases uniformly, the other may: | Criterion III possibilities: position vs. time for speeding up and slowing down [Unit 2]. This is not enough variety of phenomena to meet this graphing benchmark idea. | III |
| As one variable increases uniformly, the other may (3) get closer and closer to some limiting value [e.g., y = mx^(-n) + b] | Criterion III possibilities: gravitational force vs. distance [Unit 2]; shadow size vs. distance and intensity vs. distance [Unit 3]; pressure vs. volume at constant T [Unit 5]. (1) The two possible examples from Unit 3 are not closely linked to the content benchmarks and would take considerable additional instructional time. (2) There is not enough instructional time in Unit 3 or 5 to meet the content benchmarks and Criteria IV and V in these units. | III; III IV V; III IV V |

Next we estimated how much instructional time it would take to teach each unit. Then we reviewed the Project 2061 Instructional Criteria to estimate the amount of time required to meet a benchmark idea. This is a difficult estimate because some ideas are simple and can be met in little time, while other ideas are abstract, are associated with many misconceptions, and would take many days of instruction to meet. Some efficiency can be gained, however, when related ideas and content ideas are taught together. Our estimated average was about 45 minutes per idea. Spread over 120 to 130 instructional hours, this estimate limited us to about 160-170 total benchmark ideas. Since we already had at least 120 content benchmark ideas (from 20 Benchmarks), we could meet only about 45 related benchmark ideas.
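The time-budget arithmetic in the last two paragraphs can be checked with a short calculation. This is only an illustrative sketch, not a tool the project used: it assumes one science period per school day (five per week), which the text does not state, and the constant and function names are hypothetical. It derives the hour range from the period lengths, then divides 120 to 130 hours by the estimated 45 minutes per idea.

```python
# Illustrative sketch of the CIPS time-budget arithmetic.
# ASSUMPTION: five science periods per week (one per school day);
# the text gives only weeks and period lengths.

WEEKS = 32              # 36-week year minus 3-4 weeks of testing and interruptions
PERIODS_PER_WEEK = 5    # assumed, not stated in the text
MINUTES_PER_IDEA = 45   # estimated average time to meet one benchmark idea
CONTENT_IDEAS = 120     # content benchmark ideas from the 20 content Benchmarks


def hours_available(period_minutes: int) -> float:
    """Instructional hours in a year for a given period length."""
    return WEEKS * PERIODS_PER_WEEK * period_minutes / 60


def ideas_that_fit(hours: float) -> int:
    """Whole benchmark ideas that fit in the given number of hours."""
    return int(hours * 60 // MINUTES_PER_IDEA)


def related_ideas_left(hours: float) -> int:
    """Ideas left for related benchmarks after the content ideas are covered."""
    return ideas_that_fit(hours) - CONTENT_IDEAS


if __name__ == "__main__":
    for minutes in (45, 50):
        print(f"{minutes}-minute periods: about {hours_available(minutes):.0f} hours")
    for hours in (120, 130):
        print(f"{hours} hours: {ideas_that_fit(hours)} ideas, "
              f"{related_ideas_left(hours)} left for related benchmarks")
```

With these inputs, 45-minute periods give 120 hours and 50-minute periods about 133 hours. Dividing 120 to 130 hours by 45 minutes yields 160 to 173 ideas, leaving 40 to 53 for related benchmarks; the figures of about 160-170 and about 45 quoted above are rounded versions of these ranges.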

As developers, we felt responsible for providing a coherent set of well-sequenced activities and units to meet the physical science content benchmarks in our one-year time frame. If we included too many related benchmarks from other chapters, we would risk having teachers rush through the curriculum activities and leave inadequate time for students to make sense of the content benchmarks (Instructional Criteria IV and V). To decide which of the related benchmark ideas we could reasonably meet, we made a large matrix of the related benchmark ideas versus our proposed units; Table 3 shows an example from this matrix. We first used Instructional Criterion III, Engaging Students with Relevant Phenomena, to brainstorm the content units in which examples of the related benchmark ideas might be introduced. Then we discussed how, and in which unit(s), Criterion IV, Developing and Using Scientific Ideas, and Criterion V, Promoting Student Thinking about Phenomena, Experiences and Knowledge, might be accomplished. Finally, we considered the instructional time this would take and whether there was enough time to meet the content benchmark ideas as well as the related benchmark ideas.

Not surprisingly, we very reluctantly decided that there is not enough instructional time in one year to meet most of the related benchmark ideas. In the end, we selected 45 ideas from 17 benchmarks that we thought we could meet (see the third column of Table 2). We decided to keep track of the related benchmark ideas that we address in the curriculum but do not meet. During the last year and a half of testing and revising our curriculum in classrooms, we have found that it takes middle-school students longer than expected to complete activities based on the Instructional Criteria. As a result, the number of benchmark ideas we are able to meet keeps decreasing, as shown in the fourth column of Table 2. Occasionally, however, we find that in meeting one set of related benchmark ideas, we are also meeting another benchmark idea we did not anticipate meeting.

Incorporating Project 2061 Instructional Criteria in CIPS Pedagogy

Since the analysis of the benchmarks took much longer than expected, we had only one month to discuss together how to incorporate the Project 2061 Instructional Criteria in our CIPS units. We were worried about keeping to our proposal timeline, and we had only a short time left to write draft units to be tested the following academic year.

We used the Instructional Criteria to modify the learning cycle that two of the project directors had developed for teaching pre-service elementary teachers and high-school physics students in a previous NSF project (the Constructing Physics Understanding, or CPU, Project). First we considered Criterion I.3, Justifying Activity Sequence: Does the material involve students in a logical or strategic sequence of activities (versus just a collection of activities)? We defined the referent for "logical or strategic sequence of activities" to be the middle-school students, not us (the developers) with our expert perspectives on physics and chemistry. In other words, we knew from the research literature on student learning in science (and from our own teaching experiences) that a sequence of activities that is "logical" for an expert scientist is not usually logical to novice (beginning) students. For example, a logical approach to the small particle model of matter parallels the historical approach of building evidence for the existence of atoms and molecules: the laws of definite and multiple proportions, Brownian motion, and so on. The research evidence indicates that this approach is not effective for middle-school (and most high-school) students, for several different reasons. Consequently, many students graduate from high school chemistry with many misconceptions about the small particle model.

We decided to develop strategic sequences of activities by using the research literature about students' prior knowledge (including misconceptions) as the starting point, and the benchmark ideas as the ending point, of each of our learning cycles. We planned to design each activity in a learning cycle to help students along a learning trajectory from their initial ideas to the benchmark ideas. We used the Instructional Criteria to determine some required characteristics of the learning trajectory. By considering each Instructional Criterion in turn, we modified the CPU learning cycle for the CIPS Project. Each unit in CIPS consists of two to four learning cycles, and each learning cycle usually targets a small set of related benchmark ideas (about one to four ideas). The CIPS learning cycle has gone through many modifications in the last two years. Our current version of the four types of lessons included in a learning cycle is described below.

Our Initial Ideas. A two-day lesson designed to elicit students' prior knowledge about the targeted benchmark ideas. A problem is posed, and cooperative teams of students usually predict, observe, and present their ideas about the relevant situation or event in the problem. Occasionally teams explain everyday events.

Developing Our Ideas. A strategic sequence of 4-6 lessons designed to build on students' prior knowledge and help them make sense of the set of targeted benchmark ideas. A development lesson usually "targets" one benchmark idea. That is, each lesson is designed to help students make sense of one benchmark idea. Occasionally a development activity is designed to review required prerequisite knowledge. There are two types of development lessons that we call "constructing lessons" and "presenting lessons." Constructing lessons are used when the targeted benchmark idea is simple enough to be constructed and tested by students through hands-on experiences. Presenting lessons are used when the targeted benchmark idea is too abstract for students to construct and test the idea through hands-on experiments.

Putting It All Together. In this lesson, teams compare their initial ideas with the benchmark ideas they have learned in the development activities, and any technical terms are introduced at this point. A reading and/or the teacher models how the benchmark ideas can be used to solve the original problem posed in the first lesson; teams are then guided as they practice using the benchmark ideas to solve part of the original problem or a very similar problem.

Idea Power! At least two lessons where students practice using the benchmark ideas to solve a real-world problem. The first lesson is usually more guided than the second.

To make sure that we were incorporating all of the Instructional Criteria in our learning cycle, we constructed a table showing the lesson activities in which the Instructional Criteria are commonly addressed. Our current version of this table is shown below.

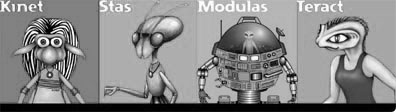

Another aspect of the instructional design we discussed was the motivational story line we could use for the curriculum. We decided to represent the curriculum themes in a science-fiction story line involving four teenage aliens from a United Federation of Planets. The first-contact policy of the Federation was to send representative teenagers to school on the new planet.

Each alien represented a different theme:

- Teract (Interaction): How are objects acting on or influencing each other?

- Modulas (Systems): What are the inputs and outputs of the system?

- Kinet (Energy): What's changing?

- Stas (Conservation): What stays the same?

| CIPS Lessons | Description of Lesson Activities | Some Common AAAS Criteria Addressed |
|---|---|---|
| Unit Overview (Teacher's Guide only) | Includes targeted benchmark ideas for unit; how they are taught in each cycle. | II.1 Prerequisite Knowledge/Skills; II.2 Alerting Teachers to Commonly Held Ideas; I.3 Justifying Activity Sequence |
| Cycle Overview (Teacher's Guide only) | Includes prerequisite knowledge/skills, targeted benchmark ideas for cycle, commonly held student ideas, and how ideas are taught in cycle. | |
| Unit/Cycle Introduction | Science fiction story line to motivate learning of targeted benchmark ideas. | I.1 Conveying Unit Purpose |
| Our Initial Ideas (elicitation of students' ideas related to targeted benchmark ideas) | | |
| Purpose | Relates cycle key question to science fiction story line. | I.1 Conveying Cycle Purpose; I.2 Conveying Lesson Purpose |
| We think . . . | Problem (describe, explain or predict) posed about situation(s) or event(s). Partners or teams discuss and write down their ideas (and reasons). | II.3 Assisting Teacher in Identification of Own Students' Ideas; V.1 Encouraging Students to Explain Their Ideas |
| Explore Your Ideas | Teams do an experiment (or participate in a class demonstration). They discuss their ideas about the questions posed and write/draw their ideas on a presentation board. | II.4 Addressing Students' Commonly Held Ideas; III.1 Providing Variety of Phenomena; III.2 Providing Vivid Experiences |
| Our Class Ideas | Teams present ideas to class. Class members ask clarifying questions. Teacher helps summarize Class Initial Ideas. | V.1 Encouraging Students to Explain Their Ideas |
| Developing Our Ideas - Constructing Type | | |
| Purpose | Review of what has been learned so far and key question of what needs to be learned next. | I.2 Conveying Lesson Purpose |
| We think . . . | Elicits students' ideas about relevant situation(s) or event(s) related to targeted benchmark idea. | II.3 Assisting Teacher in Identification of Own Students' Ideas; V.1 Encouraging Students to Explain Their Ideas |
| Explore Your Ideas | Students explore situation(s) or event(s) through hands-on activity, class demonstration(s), or consideration of known everyday event(s). | II.4 Addressing Students' Commonly Held Ideas; III.1 Providing Variety of Phenomena; III.2 Providing Vivid Experiences; IV.2 Representing Ideas Effectively |
| Make Sense of Your Ideas | Series of questions to help students relate observations to targeted benchmark idea. | V.1 Encouraging Students to Explain Their Ideas; V.2 Guiding Interpretation and Reasoning |
| Reflect on Your Ideas (not every lesson) | Writing activity for students to reflect on what they have learned. | V.3 Encouraging Students to Think About What They Have Learned |
| Developing Our Ideas - Presenting Type | | |
| Purpose | Review of what has been learned so far and key question of what needs to be learned next. | I.2 Conveying Lesson Purpose |
| Introduction to Targeted Benchmark Idea | Reading, demonstrations, and class discussion of targeted benchmark idea. | III.1 Providing Variety of Phenomena; V.2 Guiding Interpretation and Reasoning; IV.2 Representing Ideas Effectively |
| You Try It! | Students explore additional situations and events not used to present the idea. | III.2 Providing Vivid Experiences; V.1 Encouraging Students to Explain Their Ideas; IV.4 Providing Practice |
| Reflect on Your Ideas (not every activity) | Writing activity for students to reflect on what they have learned. | V.3 Encouraging Students to Think About What They Have Learned |
| Putting It All Together (summarize benchmark ideas learned, introduce how to use ideas) | | |
| Consensus Ideas | Compare ideas learned with class initial ideas. | V.3 Encouraging Students to Think About What They Have Learned |
| How Do Scientists Represent These Ideas? | Reading/discussion of technical terms for targeted benchmark ideas. | IV.1 Introducing Terms Meaningfully |
| How Do Scientists Use These Ideas? | Reading and/or teacher modeling of how to use benchmark ideas to solve original problem posed in Our Initial Ideas lesson. | IV.3 Demonstrating Use of Knowledge |
| You Try It! | Guided (scaffolded) practice of using benchmark ideas to solve part of original problem or a very similar problem. | IV.4 Providing Practice |
| Idea Power! (students apply what they have learned to solve at least two problems) | | |
| Situation and Problem Statement | Problem statement in the form: "Use your consensus ideas to describe (or explain, or predict) something about a real-world situation or event." | IV.4 Providing Practice; V.3 Encouraging Students to Think About What They Have Learned |
| Solution (includes evaluating solution) | Sometimes involves an experiment. Students follow a four-step problem-solving strategy to solve the problem. | |

The final aspect of the instructional design we discussed was how to teach the content benchmarks and related benchmarks that would not be taught within a single unit. Only two of the project directors had any experience developing units based on a learning cycle. None of the project directors had experience developing activities to teach the energy benchmark ideas, the themes of interaction and systems, the nature of science benchmarks, or many of the habits of mind benchmarks. Because of our lack of time and expertise, we decided that one author would take on the difficult task of introducing all of these ideas in an introductory unit. This left the remaining authors free to learn how to develop units based on the CIPS learning cycle for benchmark ideas with which they were more comfortable.

Looking back on our process, we should have spent an additional 2-3 months together developing and writing a draft of the first unit and part of a content unit. This would have allowed time to develop the necessary understanding of the Instructional Criteria and of what would be required to fully incorporate the criteria in our CIPS learning cycles. As it was, we ended this phase with different levels of understanding of the Instructional Criteria, based on our past experiences. For example, one project director, who had no experience writing lessons based on a learning cycle, had difficulty with Criterion II (Taking Account of Student Ideas), particularly with writing activities that elicit students' ideas. Another project director had difficulty with Criterion III (Engaging Students with Relevant Phenomena). All authors had difficulty with Criterion IV.3, Demonstrating the Use of Knowledge, because of our lack of experience teaching middle-school students. The fact that we were not "all on the same page" with respect to the Instructional Criteria ultimately cost us much time and effort, as described in the next section.

Importance of Ongoing Formative Evaluation of Materials

The ongoing testing of draft materials with students, observations of classrooms, and teacher feedback have proved to be an invaluable and indispensable part of the development of the CIPS materials. Below is a description of some of the things we learned through this kind of ongoing formative evaluation about incorporating the Project 2061 Instructional Criteria.

I. Providing a Sense of Purpose.

- Conveying unit purpose. Does the material convey an overall sense of purpose and direction that is understandable and motivating to students?

- Conveying lesson purpose. Does the material convey the purpose of each lesson and its relationship to others?

Despite our attempts to meet these Criteria in CIPS materials, the teachers and observers reported that students (and often the teachers) were "getting lost." There were three reasons for this. In our first draft units, the purpose of each cycle and lesson activity was given in a short introductory dialog between the aliens in our science fiction story line, which ended in a key question that was addressed in the cycle or lesson. However, middle-school students did not like our alien story line. [They did not believe that teenagers from planets with space travel would have any questions about science or difficulty learning science.] As a result, these introductions were often read very quickly and not discussed. Moreover, the teacher's guide did not stress the importance of reading and discussing the introduction. We decided to change the story line (the aliens are now young adults) and reduce the role of the aliens in the activities. So far this has proved successful. We are now getting student comments about wanting to know more about the aliens.

We also learned through the observations and feedback that the key questions in our first drafts were not very good. Students and teachers did not always understand the questions or see the connection between a question and its activity. In addition, we did not have students discuss the key question and its answer at the end of each activity. Finally, the activities were often too long and involved, with too many questions (see Criterion V below). All of this contributed to students' losing the sense of purpose for the activity.

- Justifying activity sequence. Does the material involve students in a logical or strategic sequence of activities (versus just a collection of activities)?

All authors had difficulty implementing this part of the CIPS pedagogy. For example, we found it almost impossible to give up our "favorite" activities or approaches to teaching particular content ideas in our first drafts of the units. [By the second and third drafts, we were getting much better.] We also found it difficult to create new activities that really targeted the benchmark ideas, particularly for the energy, nature of science, and habits of mind benchmarks. Not surprisingly, the ongoing classroom observations and teacher feedback indicated that our activity sequences did not always prove to be "strategic."

Much of our revision and classroom testing at this stage of the development process involves what we call "tightening" the cycles to make them more focused and efficient.

A related issue we faced was providing a sense of curriculum purpose and involving students in a logical and strategic sequence of cycles and units. The classroom observations and teacher feedback indicated that we were inconsistent in carrying the organizing themes of the curriculum (interaction, systems, energy, and forces) through our units. Based on this feedback, we gradually evolved a strategic sequence within a unit, as outlined below.

- Students first investigate the interaction effects for the interaction type(s) introduced in the unit. Many of the content benchmark ideas deal with interaction effects. For example, in light interactions, "seeing" is the interaction effect when light energy interacts with the human eye (4F6-8#2 Benchmark idea). For thermal interactions, a change in temperature is an interaction effect when a hotter object interacts with a colder object that it is touching.

- When appropriate (i.e., for the content benchmark ideas that deal with energy or force), students describe the interaction type(s) in terms of energy transfers and transformations and/or forces. For example, different light energies transferred from a source to the human eye (receiver) are "seen" as different colors (4F6-8#3 Benchmark idea). During the gravitational interaction, both objects (masses) pull on each other (4G6-8#1 Benchmark idea). For the gravitational interaction, energy must be transferred into the system to pull the two objects (masses) apart. This energy, which is stored in the system, is called gravitational energy (4E6-8#4 Benchmark idea).

- For some content benchmarks, students investigate the characteristics of the objects and events that determine the strength or magnitude of the interaction. For example, the strength of the gravitational interaction depends on the distance between two objects: the farther apart the objects are, the weaker the interaction (4G6-8#1 Benchmark idea).

II. Taking Account of Student Ideas.

- Attending to prerequisite knowledge and skills. Does the material specify prerequisite knowledge/skills that are necessary to the learning of the benchmark(s)?

We found the Project 2061 strand maps and the grades 3-5 Benchmarks to be very helpful in identifying prerequisite knowledge and skills. Nevertheless, before we tested the draft units with students, it was difficult to determine how much time it would take to either review or teach the prerequisite knowledge and skills. For example, in the Interactions and Matter unit we found that middle-school students are confused about how to compare the "amount" of a substance in different containers or in different phases. Most 8th graders do not have the prerequisite ideas that surface area is determined by counting the unit squares that cover the unknown surface, that volume is determined by counting the unit cubes that fit inside the object (Benchmark 9C3-5#1), and that mass is determined by counting the unit masses that balance the unknown object. In revising the unit, we had to include a review of these ideas.

- Alerting teacher to commonly held student ideas. Does the material alert teachers to commonly held student ideas (both troublesome and helpful)?

- Assisting teacher in identifying own students' ideas. Does the material include suggestions for teachers to find out what their students think about familiar phenomena related to a benchmark before the scientific ideas are introduced?

- Addressing commonly held ideas. Does the material attempt to address commonly held student ideas?

Our pedagogy required us to be steeped in the research literature on commonly held student ideas because these ideas are the starting point for our learning cycles. We found that the existing research literature is a necessary starting point but is not sufficient for predicting which ideas middle-school students find easier and which they find more difficult to learn in CIPS. Our observations and teacher feedback suggest that this is due to: (a) our unconventional approach to teaching some of the physical science benchmark ideas; and (b) cumulative learning from previous units.

For example, in CIPS we teach the small particle model of gases before we teach the benchmark ideas about the process of heating and cooling a gas. Students have done collision experiments with steel balls, seen animations and simulations of what happens when faster-moving gas particles collide with slower-moving particles, and described the collision interaction in terms of energy transfer. So when we get to heating and cooling gases, we find that most CIPS students do not start with the commonly held idea that cold, or both cold and heat, is transferred in a thermal interaction. Similarly, we develop the small particle model of gases before the particle model of liquids and solids, and do not investigate phase change until the end of the unit. We found that most students do not start the learning cycle on the particle model of solids and liquids with the commonly held idea that the particles of solids and liquids have the same properties as the solids and liquids themselves (e.g., particles of solids are cold, hard, and rigid, while particles of liquids are warmer, larger, and softer).

On the other hand, we are also finding that cumulative learning from previous units leads to student ideas in later units that we had not predicted, ideas that Jim Minstrell would describe as higher up the ladder toward the scientific idea. This is particularly true for the Benchmark ideas about energy transfers and transformations. Such ideas can be identified only through ongoing research in classrooms implementing a curriculum based on the Benchmarks and Instructional Criteria.

III. Engaging Students in Relevant Phenomena.

- Providing variety of phenomena. Does the material provide multiple and varied phenomena to support the benchmark idea(s)?

- Providing vivid experiences. Does the material include activities that provide firsthand experiences with phenomena, when practical, or provide students with a vicarious sense of the phenomena when not practical?

Classroom observations and feedback indicate mixed results for this criterion. About 50% of our lessons involve students in hands-on activities. Not surprisingly, we have the most difficulty providing relevant phenomena for the energy benchmarks. We are currently experimenting with videos and with the computer simulations we designed for each unit. These simulations can provide students with a vicarious sense of phenomena when actual experiments are difficult or tedious to perform. For example, in the electromagnetic unit, students do one hands-on experiment to determine that a compass is deflected near a current-carrying wire. The computer simulation then allows students to "observe" the compass deflection in a variety of different circuits.

IV. Developing and Using Science Ideas.

- Introducing terms meaningfully. Does the material introduce technical terms only in conjunction with experience with the idea or process and only as needed to facilitate thinking and promote effective communication?

This criterion was met in our pedagogy by not introducing technical terms until the Putting It All Together lesson toward the end of each cycle. Observations and teacher feedback did indicate, however, that teachers, out of habit, had difficulty refraining from using the technical terms in their teaching.

- Representing ideas effectively. Does the material include accurate and comprehensible representations of scientific ideas?

For each CIPS unit we designed at least one computer simulation that includes a representation of some of the target benchmark ideas. For example, the Interactions and Matter unit includes a simulation of ideal gas particles in a container with fixed, rigid walls or a container with one movable wall. Students can run the simulation to see what happens to the gas particles when the container is heated or cooled. They can also see what happens to the density of the gas particles when more gas is pumped into the container or some gas is pumped out of the container.

In addition, each simulation has the same "energy tool." Students can select a system of interest and a bar graph appears for energy input (transfer of energy into the system), energy output (transfer of energy out of the system), and changes in energy within the system (energy transformations). This energy tool is used to teach the conservation of energy.
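The bookkeeping that the energy tool's three bars are meant to make visible can be summarized as a single balance. This is a minimal sketch of the standard statement of energy conservation for a system, written in our own notation; it is not a description of how the CIPS software actually computes or displays the bars:

$$\Delta E_{\text{system}} = E_{\text{in}} - E_{\text{out}}$$

Here $\Delta E_{\text{system}}$ is the net change in energy summed over all forms stored in the system. Transformations inside the system shift energy from one form to another but do not change this total, so the only way the system's total energy can change is through a difference between the energy transferred in and the energy transferred out.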

We have not had enough classroom experience with the simulations to evaluate their effectiveness. We have experimented with several diagrammatic representations, for example an energy diagram to help students figure out and represent the energy inputs, outputs, and transformations within a system. Class observations and teacher feedback suggest that this representation may be effective, but more observations are needed.

- Demonstrating use of knowledge. Does the material demonstrate/model or include suggestions for teachers on how to demonstrate/model skills or the use of knowledge?

This criterion was the most difficult for our development team to understand and implement. This was due, in part, to the lack of middle-school teaching experience of the co-project directors. It was also due to the lack of time in the first year to discuss and try different ways to meet this criterion.

The first observations and teacher feedback made it clear that modeling is absolutely essential for middle-school students to be able to apply what they have learned to everyday situations. We also learned that most teachers need time to learn how to model. We found that if we do not include modeling in the student materials, teachers will often skip the modeling suggested in the teacher's guide. We are still trying to improve the modeling in the CIPS units.

- Providing practice. Does the material provide tasks/questions for students to practice skills or using knowledge in a variety of situations?

In principle, we were meeting the criterion of providing tasks/questions for students to practice skills or using knowledge in a variety of situations in our application activities for each cycle. In practice, however, the observations and teacher feedback indicated that we were not meeting this criterion because each unit was taking much longer to teach than expected.

Additional Lessons Learned

Other Prerequisite Skills

As authors, we all underestimated the time it takes middle-school students to complete activities based on the Instructional Criteria. Therefore, our units were too long and needed extensive revisions. Moreover, we were unable to test all of the units in the academic year, which put us further behind in our development timeline. We discovered two related reasons for the longer-than-expected time to complete the units. First, we did not include explicit teaching of cooperative grouping skills in the student material or teacher's guide of our first draft units because of the large amount of time and effort required to do this. Instead, we gave our teachers a book about cooperative grouping and asked them to read the book and try to include some cooperative grouping skills in their teaching. This proved to be a big mistake. Middle-school students do not know how to work as a team to complete an activity or how to "discuss" ideas, and our teachers did not have sufficient knowledge about cooperative grouping to deal with the numerous difficulties that arose. We learned that cooperative-grouping skills must be explicitly included in the student materials and teacher's guide from the very beginning. Otherwise, valuable learning time is wasted both during the activities and in reteaching the content.

Second, we found that middle-school students do not have much previous experience reflecting on and writing down their ideas, so they took a very long time to do this. Moreover, they disliked having to write down their ideas — this was not what they "expected" in a science class. We had two choices. We could either drastically reduce the number of Benchmark ideas we would meet in the course, or find some way to decrease the amount of writing time without compromising the Instructional Criteria. We are still experimenting with this issue.

Student/Parent Reaction.

The introductory unit, in particular, was much too long, so the students and their parents did not believe they were learning any "real" science. Even after the first two content units (Interaction and Motion and Light Interactions), some students and their parents did not think the students were learning enough science. There were two reasons for this. First, although the teachers found that students who usually do not perform well in science (and other classes) perform very well with CIPS, the students who complained the most were those who had been very successful in past courses by memorizing the content. At one site, however, we found that when the parents saw the curriculum and what students were asked to do and think about (at parent-student conferences), they agreed with the goals of the CIPS curriculum and the complaints died away.

Second, the curriculum is almost too successful at helping the majority of students to understand and be able to use their physical science knowledge! Students and parents expect physical science to be very difficult to learn. When it is not difficult, they assume that the curriculum is a failure. We are currently experimenting with different ways to enhance students' understanding of how much they have learned.

Teachers, students, and parents have strong expectations of what should happen in a science class. New curricula based on the Project 2061 Benchmarks and Instructional Criteria, which by definition do not meet these expectations, must be very creative and explicit about what is being learned and how it is being learned. Our experience reinforces the need to include professional development packages for teachers and manuals for parents to help them understand the goals and pedagogy of CIPS.

***

The lessons we have learned in the CIPS project are not new — they appear in the education research literature. But curriculum developers are also people who, like students, must learn by constructing their own meaning of what it means to develop a curriculum based on the Project 2061 Benchmarks and Instructional Criteria. If we want successful curricula, sufficient time and ongoing research must be built into curriculum projects for the developers to construct knowledge about the development process and improve their curricula.